Revista Electrónica de Investigación Educativa

Vol. 10, Num. 2, 2008

Evaluation of teacher-training programs in cooperative learning methods, based on an analysis of structural equations

José Manuel Serrano (1)

serrano@um.es

Tiburcio Moreno Olivos (2)

tiburcio34@hotmail.com

Rosa María Pons Parra (1)

rmpons@um.es

Rosamary Selene Lara Villanueva (2)

rselenelara@hotmail.com

(1) Departamento de Psicología Evolutiva y de la Educación, Universidad de Murcia, Campus Universitario de Espinardo s/n, C. P. 30071, Murcia, España

(2) Instituto de Ciencias Sociales y Humanidades, Área Académica de Ciencias de la Educación, Universidad Autónoma del Estado de Hidalgo, Carretera Pachuca-Actopan, Km. 4, C. P. 42160, Pachuca, Hidalgo, México

(Received: August 25, 2007; accepted for publication: May 2, 2008)

Abstract

The present study focuses on the design of an evaluation of teacher-training programs. The authors emphasize the importance of evaluating this kind of program and of carrying out that evaluation with structural equation models. Specifically, four exogenous variables are postulated, corresponding to the four segments of a program, together with one endogenous variable that refers to the results, expressed in terms of adequacy, productivity, efficacy, efficiency and effectiveness. Both the structural and the measurement components of the corresponding causal model are fully developed.

Key words: Cooperative learning, causal models, structural equations models, teacher education, curriculum evaluation.

Introduction

Cooperative learning

Cooperative learning methods are systematic instructional strategies that can be used in any course or academic level and applied in most classes of the school curriculum. All of these methods share two characteristics. First, the class is divided into small, heterogeneous groups that are representative of the classroom population as a whole. Second, the methods seek to maintain positive interdependence among group members by applying the principle of group reward and a specific task structure designed to reach the proposed objectives, which are usually formulated at two levels: individual and group (Serrano and Gonzalez-Herrero, 1996).

Although the cooperative organization of the classroom has a long history (Serrano and Ruiz Pons, 2007), it was not until the second half of the last century that these instructional strategies became firmly established. They have since multiplied to such an extent that the scientific literature on implementing cooperative learning now amounts to nearly a quarter of a million printed pages and more than ten thousand studies. This has led researchers to conduct numerous meta-analyses in an attempt to bring some order to this flood of results.

Based on the results of the first generation of studies, which focused on comparing student performance under the three possible forms of classroom organization (cooperation, competition and individualization), one may conclude that, although the teacher plays a role in all three, in cooperative learning methods the teacher emerges as the key element in the students’ socio-academic achievement. Indeed, although cooperative learning methods can be incorporated into any paradigm and within any instructional approach, it is no less true that this methodology is best brought into play within the cognitive paradigm (in its constructivist version) and the situated learning approach (at least from the perspective of the teacher’s role) (Serrano and Pons, 2006).

On the one hand, it is in the constructivist version of the cognitive paradigm that the educational aims addressing the development of learning (learning to learn) and thinking (learning to think) are most prominent; the teacher must therefore relate the content to the students’ experiences and everyday knowledge, provoke cognitive imbalances and act as a counselor. In this cognitive approach the student is considered to be more than a receiver (informationism) or an actor (behaviorism): the student is a co-author of the teaching and learning processes.

On the other hand, in the instructional approach called situated instruction (Jones, 1992), the teacher’s task is to provide students with multiple knowledge bases and learning opportunities, organizing human and material resources so that learners gain different perspectives on the same topic, and to help students learn (both content and strategies) by modeling their thinking and learning processes and activating their prior knowledge and skills.

If we take into account that in the constructivist paradigm the unit of analysis of the teaching and learning process is the interactive triangle, and that in the modification proposed by some of us (Serrano and Pons, 2008) the teacher is the center of gravity (barycenter) of this triangle, it follows that the social and academic achievements attainable through a cooperative organization of the classroom will depend, to a great extent, on the appropriate training of this educational professional. In most countries, specific instruction in this type of methodology is not included in initial teacher-training curricula; it therefore seems clear that we must resort to ongoing training to fill the gaps left during the initial phase of teacher education.

I. Training teachers in methods of cooperative learning

The training of teachers in cooperative learning methods has become one more strand of this research topic, which since the 1970s has produced hundreds of publications, both on general aspects of training (Dettori, Gianneti and Persico, 2006) and on specific areas of the curriculum (Golightly, Nieuwoudt and Richter, 2006; Leikin, 2004). Although each of these methods represents a unique solution for organizing teaching activities in the classroom, their relative novelty makes it necessary to describe them in a common format that makes analysis possible (for research purposes) and allows them to be adapted to a specific teaching and learning situation (for instructional purposes), such as the instructor’s teaching style, classrooms with integrated special-needs students, or learning environments with integrated ethnic minorities. In this sense, such a format should meet the following requirements (Serrano and Calvo, 1994):

- It should not only guide the selection of an appropriate method, but also help the instructional planner produce adequate descriptions of already-selected methods that can address particular instructional objectives when these are especially needed;

- It should cover a wide range of small-group activities, and should be flexible enough to allow the creation or adaptation of activities tailored to the needs and idiosyncratic characteristics of each classroom;

- It should be useful for developing teaching materials: guides for group work, the materials the groups will work with, and the evaluation instruments;

- It must include descriptive categories that differentiate between the different methods, and focus on those features of the small-group activities that have been validated through significant research, directly related to the students’ learning and to the group’s productivity.

This need for a system of categories led Kagan (1985) to analyze the most widely applied and studied cooperative learning methods. He identified 25 dimensions, which he grouped into six categories:

1) Philosophy of education;

2) Nature of learning;

3) Nature of cooperation;

4) Role played by the students and types of communication;

5) Role played by the teacher;

6) Evaluation.

Following the identification of these categories, almost all training programs are being developed on the assumption that a broad command of these categories on the part of teachers allows cooperative methodology to be applied properly in the classroom. In this sense, the general objectives of a teacher-training process in cooperative learning methods are determined by three basic elements of the constructivist approach:

- The student’s constructive activity, which gives access to a set of processes (such as Piagetian explanations of the construction of knowledge through processes of increasing equilibration, or the Geneva School’s socio-cognitive conflict hypothesis) that are today considered key to understanding the interactive processes established between the teacher, the student and the educational content;

- The scaffolding processes, introduced by Bruner and based both on Vygotsky’s law of double formation of the higher psychological processes and, as a direct consequence of this law, on the view of education as a creative and driving force of development. These processes refer to the need for the teacher’s action to be “adjusted” to the difficulties students encounter while solving educational tasks, so that the interactive process makes possible the creation of “Zones of Proximal Development” that allow a coherent internalization of notions;

- The sociolinguistic contexts (based on work on the ethnography of communication, ethnomethodology applied to education, educational discourse analysis and the analysis of classroom communication), which make it possible to answer not only the question of how language is learned, but also that of how people learn through the use of language, i.e., how language works in the interactions between teacher and student and among peers.

However, our experience in training teachers in cooperative learning methods (Calvo Serrano, Gonzalez-Herrero and Ato, 1996) allows us to say that the greatest deficit concerns the role of the teacher. Throughout the development of a cooperative learning method, the teacher’s specific activity can involve a wide range of behaviors that depend on the role of the cooperative group and therefore differ from one method to another. In this type of methodology, students take on roles that were traditionally reserved for the teacher; consequently, teachers who use cooperative learning must also take on new roles. In some methods, the teacher is free to work individually with students or with groups while the rest of the class maintains tutoring relationships. In methods that use “groups of experts” or “learning groups”, the teacher is available for these groups to consult and in this way facilitates the learning of the material. In methods where students assume responsibility for what and how they will carry out the teaching and learning process, the teacher is even more “liberated” and normally talks with the groups, suggests ideas and avenues of study, and thereby ensures an equitable and rational division of labor within the groups.

All these activities should be carried out within the three-dimensional framework described above: respecting and taking advantage of the student’s constructive activity, ensuring the scaffolding process, and knowing and making known the basic educational rules of classroom talk, in order to intervene in and organize activities while facilitating and promoting the negotiation of meanings around what is being done and said. It must always be borne in mind, however, that contexts of interaction are constructed by the people involved in the educational activity, and that communicative exchanges do not follow an “all or nothing” principle whereby they either occur in absolute compliance with the rules or not at all. The reality of the classroom is much more complex, and it would be a mistake to view the interaction between teacher and students as the “dramatization” of a script with previously established roles.

This change in roles, adapted to a new system of rules, requires training teachers in cooperative learning methods. Educators themselves need this training: despite the intrinsic and extrinsic value they attach to these techniques, they do not seem to be well informed about them, and are even less prepared to implement them with the required effectiveness. In this regard, teachers themselves have proposed that programs of initial and ongoing training address at least two points.

First, with regard to ongoing training, work should be done on the three types of classroom structure (cooperative, competitive and individualistic), while also indicating which of them is most desirable in given circumstances. Emphasis should also be placed on the cooperative reward structure, making three issues very clear: how to group students, how to create positive interdependence among them, and what the teacher’s function and behavior should be.

Second, with regard to initial training, cooperation should be modeled in the classes of future teachers. This can be done easily, since cooperation is as powerful a way of learning for adults as it is for young people. Modeling cooperation will not only give future teachers a deeper knowledge of how to use these strategies, but will also help them apply these strategies in their work with colleagues. The same principle should apply to ongoing training; i.e., teachers should be trained in cooperative learning methods within a cooperative training organization.

Along the same lines, the need to generalize cooperative learning among teachers has been pointed out. If teachers wish to learn from one another, they should interact in a cooperative context, since this has been shown to be equally effective with adults. In one of the meta-analyses carried out by the Minnesota School (Johnson and Johnson, 1990), 30% of the empirical studies (133) used samples of adults in research on performance. Cooperation among teachers also fosters more positive interpersonal relationships and generates higher levels of self-esteem. It should not be surprising, then, that concrete cooperative learning experiences among teachers are already under way (as in the Collegial Support Group), and that these could easily be incorporated, without substantial changes, into teaching programs (primary, junior high and high school) and into university departments and centers.

II. The evaluation of teacher-training programs

Once the need for training teachers in cooperative learning methods has been established, together with the minimum content the program must include and the methodology to be used in carrying it out, the question arises of how to evaluate this training process, i.e., how to assess the program’s efficacy, efficiency and effectiveness.

Program evaluation has a short but prolific history that begins in the 1940s (Tyler, 1950). Over this time, and especially during the last three decades (Alvira, 1991; Cronbach et al., 1980; Ibarra, Leininger and Rosier, 1984; Ichimura and Linton, 2005; Posavac and Carey, 1985; Wang and Walberg, 1987), it has taken shape as a field with its own terminology, specific conceptual knowledge, defined procedures and established analytical tools, as well as characteristic stages and processes. The most distinctive feature of this transformation may have been a conceptual and procedural shift away from viewing programming as an a priori act and evaluation as an a posteriori act, toward a conception of the two processes as inseparable. As Aristotle said in his Politics: “We will not acquire, not now and not ever, a deep knowledge of those things whose growth we have not observed from the beginning”. Evaluation and programming are thus considered today to be so interwoven that they cannot be seen as isolated or static elements, since they constitute an authentic dialectical pair.

We define program evaluation as: the issuing of value judgments on the conception, implementation and results of a program, based on empirical knowledge derived from systematic information obtained with objective research instruments, with the aim of ensuring, through the use of scientific methods, that these merit or value judgments yield the most accurate assessments possible, matched as closely as possible to the program’s viability, satisfaction, effectiveness and efficiency.

This is a personal definition, and many others may be accepted as equally (or even more) valid, so that it is possible to speak of other models of evaluation. Indeed, since Ralph Tyler (1950) developed his model of evaluation by objectives in the 1940s, other evaluative models have emerged in response to new reconceptualizations of program evaluation, such as those of Stake (1998), Suchman (1967; 1990), Scriven (1967) and Stufflebeam (2001a; 2001b).

However, our definition commits us to a specific model of evaluation, since it leads us directly and unapologetically to what is known as the segmented evaluation of programs (Municio, 1992).

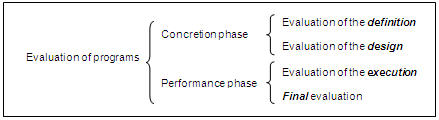

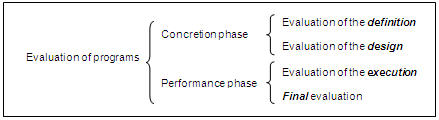

Indeed, we must consider that a program is a document of intent, technically drawn up, consisting of a plan of action in the service of valuable and necessary goals, which is articulated through a two-stage process (concretion and realization) and presents four well-defined segments: definition, design, execution and conclusion. Therefore, the segmented evaluation of programs is a set of actions for estimating the value of each and every one of the segments that make up the program (Talmage, 1982; Worthen, 1990), together with its structure and coordination for reaching the intended goals and objectives, by analyzing its relative efficacy, effectiveness, efficiency (cost/benefit and cost/efficacy analyses), productivity (performance analysis) and appropriateness (see Figure 1).

Figure 1. Actions in the evaluation of programs
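The efficiency criterion in the definition above rests on cost/benefit and cost/efficacy analyses. As a minimal sketch, assuming the conventional definitions (benefit-cost ratio = monetized benefits divided by costs; cost-effectiveness ratio = cost per unit of a non-monetary outcome), the two ratios can be computed in Python as follows. The class, the function names and all figures are hypothetical illustrations, not data or procedures from this study.

```python
from dataclasses import dataclass


@dataclass
class ProgramCosts:
    """HYPOTHETICAL cost and outcome figures for one training program."""
    total_cost: float         # total cost of running the program
    monetized_benefit: float  # benefits expressed in the same currency as costs
    outcome_units: float      # non-monetary outcome, e.g. teachers trained to criterion


def benefit_cost_ratio(p: ProgramCosts) -> float:
    """Cost/benefit analysis: monetized benefit per unit of cost (> 1 means benefits exceed costs)."""
    return p.monetized_benefit / p.total_cost


def cost_effectiveness_ratio(p: ProgramCosts) -> float:
    """Cost/efficacy analysis: cost per unit of outcome achieved (lower is better)."""
    return p.total_cost / p.outcome_units


# Two hypothetical programs measured on the same outcome.
a = ProgramCosts(total_cost=12_000, monetized_benefit=18_000, outcome_units=40)
b = ProgramCosts(total_cost=9_000, monetized_benefit=10_800, outcome_units=25)
for name, p in (("A", a), ("B", b)):
    print(name, round(benefit_cost_ratio(p), 2), round(cost_effectiveness_ratio(p), 2))
```

The cost-effectiveness ratio only supports comparisons between programs whose outcomes are measured in the same units; here program A costs less per unit of outcome than program B.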

The manifest difference between the component parts of a program requires that the evaluation of each of the segments be constructed on two well-differentiated axes: the substantive and the methodological.

The substantive axis is the differentiating axis of the programs and their evaluations.

The substantive axis is composed of five elements that allow one to answer the questions “What?”, “How?”, “For what?”, “When?” and “For whom?”. The first two elements refer to the what and the how, that is, to the content previously established in the program, its viability and the strategies it uses. The third element refers to the for what, i.e., the purpose of the program. The fourth element refers to the moment of performance, or the when. Finally, the last element to bear in mind is the audience, i.e., the for whom.

Once the foundations of the evaluation process have been laid by determining the substantive axis, it is possible to construct the framework of the evaluation program, which allows for many alternatives but is strongly influenced by the second, methodological axis. This axis allows us to answer the questions of who, how and with what.

The methodological axis therefore has three distinct elements. The first concerns who should carry out the evaluation, i.e., whether the evaluation should be external, internal or mixed. The second concerns whether the evaluation should be goal-free, objectives-based, descriptive or experimental in character; this element is therefore about how to evaluate. Finally, the third element refers to the technology to be used (documentary analysis, observation, scales, etc.) and answers the question of with what.

The methodological axis is the axis of conflict and controversy because, as Municio puts it (1992, p. 376), its elements are the cornerstones of all program evaluation. In the present paper we seek to respond to the questions raised by the methodological axis in the concrete case of evaluating a teacher-training program in cooperative learning methods.

III. Psychological research with structural equation models

The analysis of variance developed by Fisher in 1925 marked a milestone in the study of causal relationships because it sought to determine the effect of an explanatory (independent) variable on an explained (dependent) variable, that is, to establish to what extent the variation observed in the latter was due to changes made in the former.
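The idea of attributing part of the observed variation in a dependent variable to an explanatory factor can be made concrete with a one-way analysis of variance. The Python sketch below partitions the total sum of squares into between-group and within-group components and reports the F ratio and eta squared (the proportion of variation attributable to the factor). The group labels and scores are hypothetical and serve only as an illustration.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> dict[str, float]:
    """Partition the total variation of the dependent variable into a part
    explained by group membership (between) and a residual part (within)."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)
    k, n = len(groups), len(all_scores)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    return {
        "ss_between": ss_between,
        "ss_within": ss_within,
        "ss_total": ss_total,
        "eta_squared": ss_between / ss_total,  # share of variation due to the factor
        "F": f_stat,
    }


# HYPOTHETICAL achievement scores under three classroom structures.
cooperative = [7, 8, 9]
competitive = [5, 6, 7]
individualistic = [4, 5, 6]
result = one_way_anova([cooperative, competitive, individualistic])
print({k: round(v, 3) for k, v in result.items()})
```

With these data the between-group sum of squares is 14 and the within-group sum is 6, so eta squared is 0.7 and F(2, 6) = 7.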

Starting from this initial model, other statistical models were developed that can be grouped under the heading of generic models for the analysis of dependence relations; they share the aim of analyzing the variation (variance) of variables that are explained by others. These models commonly use simultaneous equations in which the variables considered endogenous (explained) appear in the equations of the model and can also act as explanatory variables at another point in the causal sequence (Arnau and Guardia, 1990; Hall, 2007; Halpern and Pearl, 2005).

However, the need soon arose for models able to analyze not only the variation of a dependent variable, but also the covariation among all the variables of the system (analysis of covariance structures). The term path analysis was coined for a technique that decomposes variances and covariances as functions of the parameters of a system of simultaneous equations (Wright, 1934). This technique laid the foundations of the models for the analysis of interdependence relations, which include structural equation models.
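To illustrate what decomposing covariances as functions of the parameters of a system of simultaneous equations means, the following Python sketch uses a hypothetical three-variable recursive path model in standardized form (X affects M, and both X and M affect Y). Applying Wright’s tracing rules, each model-implied correlation is a sum of path products: the correlation between X and Y, for example, splits into a direct effect c and an indirect effect a * b. The variable names and coefficient values are assumptions for illustration only.

```python
def implied_path_quantities(a: float, b: float, c: float) -> dict[str, float]:
    """Standardized recursive path model (every variable has variance 1):
        M = a*X + u
        Y = b*M + c*X + e
    Returns the model-implied correlations, decomposed by tracing rules,
    and the residual variances that keep M and Y standardized."""
    r_xm = a                   # single path X -> M
    r_xy_direct = c            # X -> Y
    r_xy_indirect = a * b      # X -> M -> Y
    r_my_direct = b            # M -> Y
    r_my_spurious = c * a      # M <- X -> Y (shared cause X)
    return {
        "r_xm": r_xm,
        "r_xy": r_xy_direct + r_xy_indirect,
        "r_xy_indirect": r_xy_indirect,
        "r_my": r_my_direct + r_my_spurious,
        "var_u": 1 - a ** 2,
        "var_e": 1 - (b ** 2 + c ** 2 + 2 * a * b * c),
    }


q = implied_path_quantities(a=0.5, b=0.4, c=0.3)
print({k: round(v, 3) for k, v in q.items()})
```

With a = 0.5, b = 0.4 and c = 0.3, the implied correlation between X and Y is 0.5 (0.3 direct plus 0.2 indirect). Estimating a path model amounts to choosing coefficients so that implied correlations like these reproduce the observed ones as closely as possible.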

In the behavioral sciences, the awareness that our measurements contain errors (measurement error), whether random (accidental or observational) or systematic, together with the latent (unobservable) nature of many variables of interest, led to the development of models for studying abstract concepts that are measured indirectly and are called constructs. The most common of these are exploratory factor analysis (Spearman, 1904) and confirmatory factor analysis (Jöreskog, 1969). Both formalize the relations between observable variables (indicators) and latent variables (constructs or factors), and since all the observable variables must contribute to the measurement of the construct, it is necessary to analyze their interdependence relations.
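In a confirmatory factor model each indicator is written as a loading times the latent construct plus a measurement error, and the model then implies a specific covariance matrix for the indicators. The Python sketch below computes that implied matrix for a single construct with uncorrelated errors, using three indicators that could stand for the needs, context and viability variables of the definition segment described later; the loadings are hypothetical, not estimates from this study.

```python
def one_factor_implied_cov(loadings: list[float], factor_var: float,
                           error_vars: list[float]) -> list[list[float]]:
    """Implied covariance of a one-factor model x_i = loading_i * factor + delta_i,
    i.e. Sigma = lambda * phi * lambda^T + Theta, with Theta diagonal
    (measurement errors uncorrelated with each other and with the factor)."""
    p = len(loadings)
    return [
        [loadings[i] * loadings[j] * factor_var + (error_vars[i] if i == j else 0.0)
         for j in range(p)]
        for i in range(p)
    ]


def indicator_reliability(loading: float, factor_var: float, error_var: float) -> float:
    """Share of an indicator's variance that is explained by the construct."""
    true_var = loading ** 2 * factor_var
    return true_var / (true_var + error_var)


# HYPOTHETICAL standardized loadings for needs, context and viability.
loadings = [0.8, 0.7, 0.6]
errors = [1 - lam ** 2 for lam in loadings]  # keeps each indicator at variance 1
sigma = one_factor_implied_cov(loadings, factor_var=1.0, error_vars=errors)
for row in sigma:
    print([round(v, 2) for v in row])
```

Off-diagonal entries depend only on the loadings, which is why observed covariances among indicators carry the information needed to estimate them.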

The dual need to consider, first, the relationships between indicators and constructs (measurement model) and, second, the relationships among the constructs themselves (structural model) paved the way in the 1970s for the models now called structural equation models (Goldberger and Duncan, 1973). These models include, as particular cases, all linear models (recursive and nonrecursive, with and without latent variables) and all factor-analysis models. It is not surprising, then, that in recent years “the study of structural models for the analysis of covariance matrices has allowed a significant increase in behavioral research” (Cudeck, 1989, p. 317).
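For reference, the two parts of a structural equation model are often written in the LISREL notation associated with Jöreskog, where η denotes the endogenous latent variables, ξ the exogenous latent variables, y and x their respective indicators, B and Γ matrices of structural coefficients, Λ_y and Λ_x matrices of factor loadings, and ζ, ε and δ disturbance and measurement-error terms. This is the standard general formulation from the SEM literature, shown here as a sketch rather than as notation used by the authors:

```latex
\begin{aligned}
\boldsymbol{\eta} &= \mathbf{B}\,\boldsymbol{\eta} + \boldsymbol{\Gamma}\,\boldsymbol{\xi} + \boldsymbol{\zeta}
  && \text{(structural model)}\\
\mathbf{y} &= \boldsymbol{\Lambda}_y\,\boldsymbol{\eta} + \boldsymbol{\varepsilon}
  && \text{(measurement model, endogenous constructs)}\\
\mathbf{x} &= \boldsymbol{\Lambda}_x\,\boldsymbol{\xi} + \boldsymbol{\delta}
  && \text{(measurement model, exogenous constructs)}
\end{aligned}
```

Dropping the structural equation leaves a confirmatory factor model, and treating every variable as observed without error leaves a path model; this is the sense in which structural equation models contain the earlier models as particular cases.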

In our case, the need to test a hypothesis that reflects the intent to validate (or refute) a teacher-training program requires a causal model, since such a model contains the idea of causation in explicit form. Any causal phenomenon obviously consists of two parts: a cause and an effect. For a cause/effect relationship to exist, the cause variables must temporally precede the effect variables, and there must be some type of functional relation between them that is entirely free of spuriousness. That is, the causal link between any type of phenomena, and in particular between psychoeducational phenomena, is determined by a functional relation between variables, which can be symmetrical or asymmetrical; no relevant antecedent causal variables are left out of the model; and the cause temporally precedes the effect, which in symmetrical relations can be understood if one assumes a feedback mechanism that obeys this principle (Coenders, 2005).

In this way it is possible to establish functional equations between the variables of a theoretical model, which define a complete system of structural equations, that is, a system that includes all the variables the theory postulates as relevant (a closed causal model). This implies that we can prove the theory false, but we cannot verify it (Diez, 1997). Indeed, the social and behavioral sciences often deal with entities or processes whose theory is relatively meager, at least when compared with the physicochemical sciences and even with the natural sciences. Therefore, pure experimental control is often replaced by statistical control, which requires the explicit statement of all the variables involved in the study, and in which causal relationships are inferred from the observed relationships between variables. Hence, although many of these variables tend to “move” together, i.e., they tend to show “covariance” or “correlation”, the study and analysis of such statistical relationships is not a sufficient condition for the existence of a causal relation between them.
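The warning that covariation is not sufficient for causation, and the role of statistical control, can be illustrated with a small simulation. In the Python sketch below, a hypothetical common cause Z drives both X and Y, with no causal path between X and Y; their correlation is nonetheless about 0.5, and it drops to roughly zero once Z is partialled out. The data are simulated, and partial correlation is used here as the simplest form of statistical control, not as the authors’ procedure.

```python
import math
import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def partial_correlation(r_xy: float, r_xz: float, r_yz: float) -> float:
    """Correlation between X and Y after removing the linear influence of Z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate_common_cause(n: int = 5000, seed: int = 1) -> tuple[float, float]:
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]
    x = [zi + rng.gauss(0, 1) for zi in z]  # X depends only on Z
    y = [zi + rng.gauss(0, 1) for zi in z]  # Y depends only on Z: no X -> Y path
    r_xy = pearson(x, y)
    r_partial = partial_correlation(r_xy, pearson(x, z), pearson(y, z))
    return r_xy, r_partial


r_xy, r_xy_given_z = simulate_common_cause()
print(round(r_xy, 3), round(r_xy_given_z, 3))
```

The control only works because Z was measured and included; an omitted common cause would leave the spurious correlation in place, which is why the text requires all relevant variables to be stated explicitly.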

Nevertheless, the utilization of complex statistical models can be of great help in establishing causal inferences. In this sense, models of structural equations constitute one of the most powerful tools for the study of causal relationships when these are of a linear type.

However, in spite of their sophistication, these models never prove causation, but they do permit the selection of relevant causal hypotheses by discarding those not supported by empirical evidence (Herbert, 1977). This is the basis of falsification (Popper, 1969), which relates to the tautology that propositional logic calls modus tollens: the antecedent of a conditional can be denied if its consequent is denied. In other words, a hypothesis is rejected if its predicted consequences are not observed. This implies that causal theories can be statistically rejected (falsified) if they are inconsistent with the data, or more precisely, if they contradict the covariances or correlations between variables, but they cannot be statistically confirmed.
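The asymmetry between rejecting and confirming a hypothesis follows from propositional logic. The Python sketch below checks, by enumerating every combination of truth values, that modus tollens, ((H → C) ∧ ¬C) → ¬H, is a tautology, whereas the inference one would need to “confirm” a theory, affirming the consequent, ((H → C) ∧ C) → H, is not. H stands for a causal hypothesis and C for one of its observable consequences; the function names are illustrative.

```python
from itertools import product
from typing import Callable


def implies(a: bool, b: bool) -> bool:
    """Material conditional a -> b."""
    return (not a) or b


def is_tautology(formula: Callable[[bool, bool], bool]) -> bool:
    """True if the two-variable formula holds for every truth assignment."""
    return all(formula(h, c) for h, c in product([True, False], repeat=2))


def modus_tollens(h: bool, c: bool) -> bool:
    # If H implies C and C is not observed, then H is rejected.
    return implies(implies(h, c) and not c, not h)


def affirming_consequent(h: bool, c: bool) -> bool:
    # If H implies C and C is observed, then H holds (an invalid inference).
    return implies(implies(h, c) and c, h)


print(is_tautology(modus_tollens))
print(is_tautology(affirming_consequent))
```

The second check fails for H false and C true: a consequence can be observed even though the hypothesis is false, so agreement with the data never proves the model.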

Applying causal models involves a series of steps or operations, the first of which is formulating the model. Formulating a causal model consists of describing the phenomenon to be studied, the variables involved and their status within the model. Of course, this formulation must be grounded in the theory (or theories) established by the researcher (or researchers).

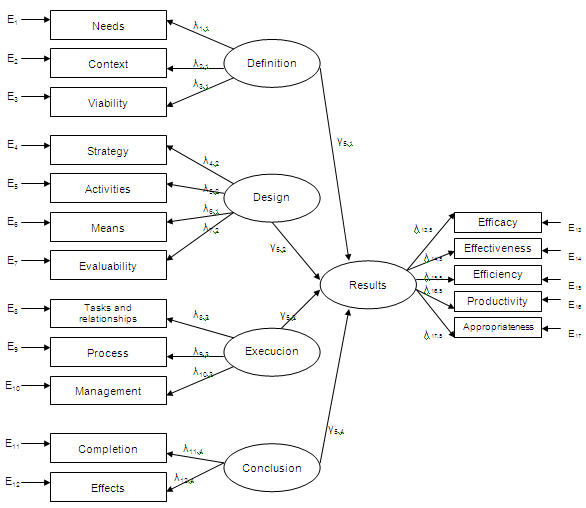

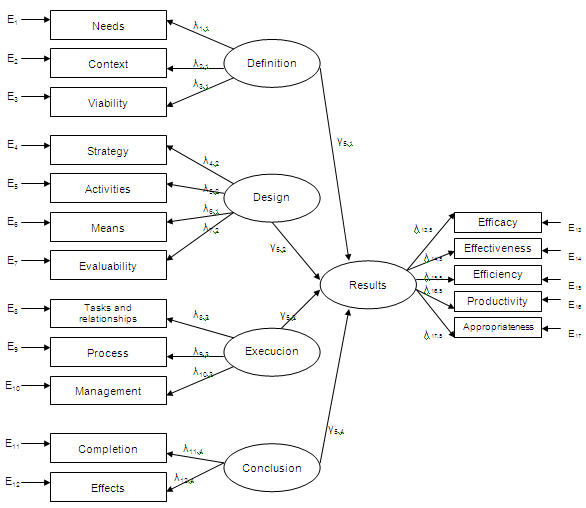

From this perspective we can formulate a causal model containing five latent variables: four independent (explanatory or exogenous) and one dependent (explained or endogenous). The four latent exogenous variables are the basic segments of a program (definition, design, execution and conclusion) and underlie twelve observable variables (three for definition, four for design, three for execution and two for conclusion).
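The model just described can be written down as a specification before any data are collected. The Python sketch below builds a model description in lavaan-style syntax (“=~” for a latent variable measured by indicators, “~” for a regression), a style also used by the Python package semopy; the string is only generated and printed here, not fitted. Needs, context, viability, strategy, activities and means are indicators named in this section; the remaining indicator names are hypothetical placeholders, and using the five result criteria from the abstract as indicators of the endogenous variable is an assumption.

```python
# Indicators per latent variable. Names flagged HYPOTHETICAL are placeholders
# for observable variables that are not named in this section.
EXOGENOUS: dict[str, list[str]] = {
    "definition": ["needs", "context", "viability"],
    "design": ["strategy", "activities", "means", "design_4"],  # design_4: HYPOTHETICAL
    "execution": ["execution_1", "execution_2", "execution_3"],  # HYPOTHETICAL
    "conclusion": ["conclusion_1", "conclusion_2"],  # HYPOTHETICAL
}
# ASSUMPTION: the result criteria listed in the abstract serve as indicators
# of the single endogenous latent variable.
ENDOGENOUS: dict[str, list[str]] = {
    "results": ["adequacy", "productivity", "efficacy", "efficiency", "effectiveness"],
}


def to_model_syntax(exogenous: dict[str, list[str]], endogenous: dict[str, list[str]]) -> str:
    """Measurement lines ('=~') for every latent variable, then one structural
    line ('~') regressing each endogenous variable on all exogenous ones."""
    lines = [
        f"{latent} =~ {' + '.join(indicators)}"
        for latent, indicators in {**exogenous, **endogenous}.items()
    ]
    for outcome in endogenous:
        lines.append(f"{outcome} ~ {' + '.join(exogenous)}")
    return "\n".join(lines)


n_exogenous_indicators = sum(len(v) for v in EXOGENOUS.values())
print(n_exogenous_indicators)  # 3 + 4 + 3 + 2, as stated in the text
print(to_model_syntax(EXOGENOUS, ENDOGENOUS))
```

Keeping the specification as data makes it easy to check that the count of observable variables matches the theoretical model before any estimation is attempted.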

3.1 Exogenous variables

3.1.1 Definition of the program

This refers to the needs that the project intends to meet and to the identification of the teachers who have such needs. However, people are immersed in specific contexts that influence their needs, so a good definition of the program presupposes an analysis of the context in which the target teachers work. Finally, an a priori necessary condition for the development and implementation of the program is its viability.

The first three observable variables therefore attempt to measure the definition construct of a teacher-training program in cooperative learning methods:

1) Needs. This variable is understood in the sense of both needs assessment and needs evaluation. It covers the analysis of the objectives of the needs search, the obstacles encountered, the conditions accepted, the risk components, and the management of the identification and selection of needs. As mentioned in the introduction, it will be measured on the basis of three components:

a) Design, collection and treatment of the information (sources, procedures, instruments, techniques, management);

b) Identification of needs (issues raised; organization and structuring of information; results desired by the participating teachers; primary needs covered by the training program, and needs not covered; secondary needs covered by the training program; criteria established by the program developers for evaluating explicit and implicit proposals; establishment of priorities based on the proposals outlined by the teachers in training);

c) Assessment and selection of needs (criteria and system for assessing the needs identified; determining which are significant and which are not, and what consequences would follow from not covering them; defining the system used to establish the criteria of importance and contrast of the needs; and determining which will be included in the training program and which will not).

2) Context. To assess the context, we start from general systems theory and focus on an analysis of the following systems:

a) Physical (delimitation of physical variables that may influence the generation of problems detected, and that create the need for training).

b) Social (includes all the aspects that influence the socioeducational needs that give rise to the training program).

c) Organizational (the proximal dimension of organization, focused on the institution or institutions that develop the project, and the distal dimension of organization, composed of the network of organizations that interact in the training program).

d) Interpersonal relations (all the loosely structured and disconnected transactions that do not fit within the previous systems).

3) Viability. Viability is evaluated ex ante, and the measurement model will analyze:

a) Technical viability (assessment of the compatibility between the means to be used, the teachers’ needs, the program objectives and the context problems. This concerns the availability of physical and technological resources for developing the training program, the availability of a team of trainers who have mastered the technology for training teachers in cooperative learning methods and who identify with the philosophy of change, and the existence of professionals from different educational levels who are willing to make a professional effort leading to the acquisition of new skills and the modification of their work habits).

b) Social viability (requires an affirmative answer to most of the following questions: “Does it meet real social needs? Is it a positive change for the program users and for their students? Can social impacts be derived from the application of the program?” and so forth).

c) Economic viability (determines the existence of a reliable general budget and a cash-flow adjusted to the available resources).

d) Management viability (assesses whether the development of the training program is guaranteed from beginning to end, through an assessment of external relations, internal organization and management capability; it is, in short, an evaluation of the team of trainers).

3.1.2 Design

1) Strategy: The strategy is the course of organized action that the training program will follow to achieve its objectives. This variable assesses the process of creation and selection (rate of innovation of the institutions involved in the project; levels of instability and turbulence in the environment in which the program is developed, which can affect the variables foreseen by the designers and authors of the project; teamwork; etc.) and the type of approach (satisfying, optimizing or adaptive). Although it is less commonly used in the social sciences, we have opted for the adaptive approach because, in this case, what matters most is not a previously determined product but the process established by our training strategy. Given the uncertainty about the knowledge level of the teachers who will take part in the training, we must evaluate the capacity to react to the kinds of developments that may arise during the training process.

2) Activities: The structure of the activities will be measured taking into account the assignments, their significant relations, the feasibility of carrying them out, the products, the time needed to carry out the activities, and the appropriateness of the design.

3) Means: The evaluation of the means of support will be performed through the analysis of their consistency and compatibility; their appropriateness, availability and utilization. It distinguishes between physical, organizational, technological, human, cultural and regulatory environments.

4) Evaluability: To measure this aspect, the criteria of Berk and Rossi (1990) will be used: objectives, structure, components, resources, and prognosis regarding the consequences of not applying the program.

3.1.3 Use

Three variables were used to configure the measurements:

1) Tasks and relationships: Evaluates the activities performed by the teachers to achieve the objectives, and the way in which the persons involved in the project sequence, rank and connect the tasks.

2) Process: The evaluation of the process will focus on the human aspects of both the tasks and the relations. The behavioral indicators will be those behaviors that help or hinder the way of addressing and resolving the conflicts that inevitably arise in the process, etc.

3) Management: This variable takes into account five factors: the activation of the means of support; the activation of positive interpersonal relationships; the activation of information and coordination of the project (internal and external); the help provided to a team of specialists by the institutions involved; and the integration of the project components.

3.1.4 Conclusion

Regarding the last of the independent latent variables, two observable variables were defined:

1) Completion: Measures the operations for verifying the program's proposals, according to their accomplishment and the changes introduced.

2) Effects: Categorizes the products, the results, the impacts and the collateral effects.

3.2 Endogenous variables

3.2.1 Results

This construct is the endogenous latent variable, and five observable variables underlie it:

1) Efficacy: Degree to which objectives have been achieved in the population of teachers of different educational levels, measured in terms of a correct implementation of cooperative methodology in the classroom.

2) Effectiveness: Degree to which the effects achieved differ from those that would be achieved without the training program, assessed by comparison with classrooms at the same school level in which the program has not been applied because their teachers did not take part in the training program (equivalent control groups).

3) Efficiency: Evaluation of the cost/benefit and cost/effectiveness relationships (cost expressed as hours dedicated to the program and hours of preparation and planning by the teaching staff based on cooperative methodology).

4) Productivity: Set of relationships between the products and the inputs and means of support used (performance yield).

5) Appropriateness: Relationship between the elements of the project in which the human component (faculty of the participating institutions, teachers of the various educational levels involved in the training activity, etc.) is the key to achieving the objectives.

Most of these variables yield categorical data; therefore, the model we propose is a causal model for categorical data (Hagenaars, 2002).

IV. Representation and specification of the model

Having developed the theoretical conceptualization of the latent variables underlying the observable variables (and their interrelations), and in order to make explicit, in a simple manner, the causal priority of these variables and their relationships, we represented them in a path diagram, which shows the causal paths established among the different variables of the model (see Figure 2).

Figure 2. Path diagram

As previously mentioned, this model specifies the hypothesized relationships among our five constructs, or latent variables (symbolized by ellipses): Definition (F1), Design (F2), Execution (F3), Conclusion (F4) and Results (F5). As the arrows indicate, F1, F2, F3 and F4 (exogenous variables) are assumed to affect F5 (endogenous variable).

Because they are independent elements of the program, the exogenous variables are assumed to be orthogonal; correlations among them therefore have no causal meaning and are fixed at 0.

The magnitudes of the causal relationships are expressed in the model by the structural coefficients $\gamma_{11}$, $\gamma_{12}$, $\gamma_{13}$ and $\gamma_{14}$.

The diagram also represents the residual D5 ($\zeta$), which is the part of the variance of the endogenous latent variable that cannot be attributed to the constructs of the model.

This set of relationships makes up the structural model we have established, which has already been justified. In addition, however, the structural model contains a measurement model consisting of seventeen observable variables represented by two vectors (which we designate x and y, and which are symbolized by squares).

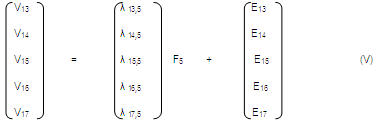

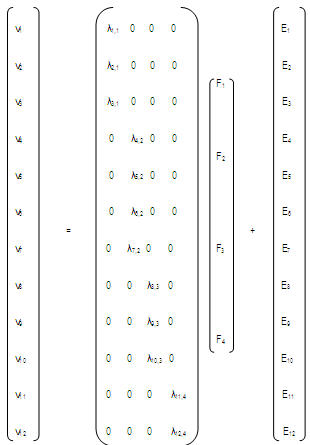

The vector y (v13, v14, v15, v16 and v17) is a vector of measurements of the dependent (endogenous) variable, and the vector x (v1, v2, v3, v4, v5, v6, v7, v8, v9, v10, v11 and v12) is a vector of measurements of the independent (exogenous) variables. The measurement errors are represented, respectively, by the vectors $\varepsilon$ (E13, E14, E15, E16 and E17) and $\delta$ (E1, E2, E3, E4, E5, E6, E7, E8, E9, E10, E11 and E12).

The coefficients, or factor loadings, of the observable variables y on the latent variable F5 ($\eta$) are represented by the elements of $\Lambda_y$, and those of the observable variables x on the latent variables F1, F2, F3 and F4 ($\xi_1$, $\xi_2$, $\xi_3$ and $\xi_4$) by the elements of $\Lambda_x$.
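A minimal sketch of how the measurement part of the path diagram could be encoded. The assignment of indicators to factors (v1 to v3 on F1, v4 to v7 on F2, v8 to v10 on F3, v11 and v12 on F4, v13 to v17 on F5) is our assumption, inferred from the order in which the observable variables of each program segment are described; the names `FACTORS_X`, `FACTORS_Y` and `loading_pattern` are hypothetical. The resulting 0/1 patterns mark which loadings of the 12 x-indicators on F1 to F4, and of the 5 y-indicators on F5, are free and which are fixed at zero.

```python
# Hypothetical assignment of indicators to latent factors, inferred from the
# order in which the observable variables are described (an assumption).
FACTORS_X = {
    "F1": ["v1", "v2", "v3"],        # Definition: needs, context, viability
    "F2": ["v4", "v5", "v6", "v7"],  # Design: strategy, activities, means, evaluability
    "F3": ["v8", "v9", "v10"],       # Use: tasks/relationships, process, management
    "F4": ["v11", "v12"],            # Conclusion: completion, effects
}
FACTORS_Y = {
    "F5": ["v13", "v14", "v15", "v16", "v17"],  # Results: efficacy ... appropriateness
}


def loading_pattern(factor_map):
    """Return (indicators, factors, pattern), where pattern[i][j] is 1 if
    indicator i loads freely on factor j and 0 if the loading is fixed at 0."""
    factors = list(factor_map)
    indicators = [v for f in factors for v in factor_map[f]]
    pattern = [[1 if v in factor_map[f] else 0 for f in factors] for v in indicators]
    return indicators, factors, pattern


x_names, xi_names, lambda_x_pattern = loading_pattern(FACTORS_X)
y_names, eta_names, lambda_y_pattern = loading_pattern(FACTORS_Y)

print(len(x_names), len(xi_names))  # 12 4
print(len(y_names), len(eta_names))  # 5 1
# Each indicator loads on exactly one factor (simple structure).
print(all(sum(row) == 1 for row in lambda_x_pattern))  # True
```

Encoding the diagram this way makes the zero restrictions explicit, which is what distinguishes a confirmatory measurement model from an exploratory one.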

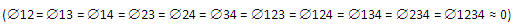

Once the relationships and the causal priority among the different variables had been established, the relationships were translated into a system of structural equations.

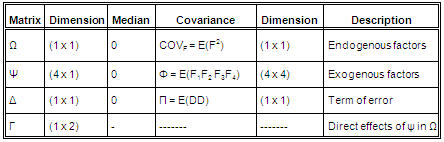

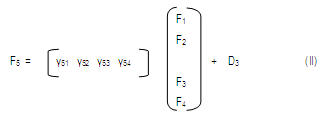

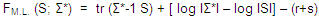

The structural equations of this model refer to the relations specified between the exogenous and endogenous variables, and are given by the following matrix expression:

$$\eta = \Gamma\,\xi + \zeta \qquad \text{(I)}$$

where $\eta$ is a scalar that defines the latent endogenous variable, $\xi$ is a (4 × 1) vector of latent exogenous variables, $\Gamma$ is a (1 × 4) vector of coefficients of the effects of the exogenous variables on the endogenous variable, and $\zeta$ is a scalar that specifies the residual (or error) of the general equation (I).
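To make the general equation (I) concrete, the sketch below evaluates it with invented parameter values (they are not estimates from this study). Because the exogenous variables are orthogonal and uncorrelated with the residual, the variance of the endogenous latent variable decomposes as $\Gamma\Phi\Gamma' + \psi$, and $1 - \psi/\mathrm{Var}(\eta)$ is the share explained by F1 to F4. The names `GAMMA`, `PHI_DIAG`, `PSI`, `eta_value` and `implied_var_eta` are hypothetical; a Monte Carlo draw checks the closed form.

```python
import random

# Illustrative, made-up parameter values (NOT estimates from the study).
GAMMA = [0.40, 0.30, 0.25, 0.20]  # gamma_11..gamma_14: effects of F1..F4 on F5
PHI_DIAG = [1.0, 1.0, 1.0, 1.0]   # variances of xi_1..xi_4 (orthogonal => diagonal Phi)
PSI = 0.50                        # variance of the residual zeta (D5)


def eta_value(xi, gamma, zeta):
    """Structural equation (I) for one case: eta = Gamma xi + zeta."""
    return sum(g * x for g, x in zip(gamma, xi)) + zeta


def implied_var_eta(gamma, phi_diag, psi):
    """Var(eta) = Gamma Phi Gamma' + psi, valid when the xi are mutually
    orthogonal and uncorrelated with zeta."""
    return sum(g * g * p for g, p in zip(gamma, phi_diag)) + psi


var_eta = implied_var_eta(GAMMA, PHI_DIAG, PSI)
r_squared = 1 - PSI / var_eta  # share of Var(eta) explained by F1..F4

# Monte Carlo check: draw zero-mean normal xi and zeta, then apply equation (I).
rng = random.Random(0)
n = 100_000
draws = [
    eta_value([rng.gauss(0, p ** 0.5) for p in PHI_DIAG], GAMMA, rng.gauss(0, PSI ** 0.5))
    for _ in range(n)
]
mc_var = sum(d * d for d in draws) / n  # population mean of eta is 0, so E[eta^2] = Var(eta)

print(round(var_eta, 4), round(r_squared, 3))  # 0.8525 0.413
print(round(mc_var, 2))  # close to var_eta; exact digits depend on the random draws
```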

The development of this general equation is detailed in Figure 3.

Figure 3. Matrix development of the general equation

We assume that the means of all variables are zero, that is, that the variables are expressed as deviations from their means, and that $\xi$ and $\zeta$ are uncorrelated.
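The zero-mean assumption costs nothing for a covariance-structure analysis: subtracting each variable's mean changes every score but leaves every covariance unchanged. The sketch below shows this with a handful of invented ratings; the function names and the data are hypothetical.

```python
def to_deviation_scores(values):
    """Re-express raw scores as deviations from their mean (mean becomes 0)."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def covariance(a, b):
    """Sample covariance (n - 1 denominator)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (n - 1)


# Hypothetical ratings of two indicators for five teachers (invented data).
raw_v1 = [3, 4, 2, 5, 1]
raw_v2 = [2, 4, 3, 5, 1]

dev_v1 = to_deviation_scores(raw_v1)
dev_v2 = to_deviation_scores(raw_v2)

print(dev_v1)  # [0.0, 1.0, -1.0, 2.0, -2.0]
print(covariance(raw_v1, raw_v2), covariance(dev_v1, dev_v2))  # 2.25 2.25
```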

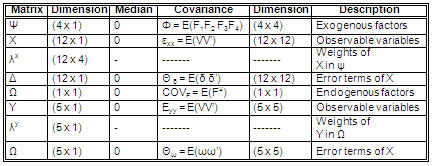

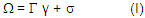

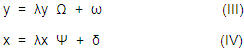

The measurement model can be expressed as two basic equations, which in matrix form are:

$$y = \Lambda_y\,\eta + \varepsilon \qquad \text{(III)}$$

$$x = \Lambda_x\,\xi + \delta \qquad \text{(IV)}$$

where y is a (5 × 1) vector of measurements of the endogenous variable, $\Lambda_y$ is a (5 × 1) vector of coefficients (factor loadings) of y on the latent variable $\eta$, and $\varepsilon$ is a (5 × 1) vector of measurement errors of y (Equation III); x is a (12 × 1) vector of measurements of the exogenous variables, $\Lambda_x$ is a (12 × 4) matrix of coefficients (factor loadings) of x on the latent variables $\xi$, and $\delta$ is a (12 × 1) vector of measurement errors of x (Equation IV).
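Equations III and IV say that each observed score is a weighted latent score plus its own error. The sketch applies them to one hypothetical teacher; the loadings (0.8 for every x, 0.7 for every y), the latent scores, the errors and the helper names `block_loadings` and `measure` are all invented, and the 3/4/3/2 split of the x-indicators across F1 to F4 is our assumption.

```python
# Hypothetical loadings (invented), following the assumed indicator-to-factor
# assignment v1-v3 -> F1, v4-v7 -> F2, v8-v10 -> F3, v11-v12 -> F4.
FACTOR_SIZES = [3, 4, 3, 2]


def block_loadings(sizes, value):
    """Lambda_x with simple structure: each indicator loads only on its own factor."""
    rows = []
    for j, size in enumerate(sizes):
        for _ in range(size):
            row = [0.0] * len(sizes)
            row[j] = value
            rows.append(row)
    return rows


def measure(loadings, latent, errors):
    """Measurement equation for one case: indicators = Lambda * latent + errors."""
    return [sum(l * f for l, f in zip(row, latent)) + e for row, e in zip(loadings, errors)]


lambda_x = block_loadings(FACTOR_SIZES, 0.8)  # 12 x 4
lambda_y = [[0.7] for _ in range(5)]          # 5 x 1

xi = [1.0, 0.0, -0.5, 2.0]   # one teacher's latent scores on F1..F4
delta = [0.0] * 12           # x-errors set to 0 to show the systematic part only
eta = [0.5]                  # latent score on F5
epsilon = [0.1, -0.1, 0.0, 0.0, 0.2]

x = measure(lambda_x, xi, delta)     # Equation IV
y = measure(lambda_y, eta, epsilon)  # Equation III
print([round(v, 2) for v in x])
print([round(v, 2) for v in y])
```

The five y-scores differ only through their errors, since all of them load on the same single latent variable.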

The matrix development of equations III and IV is shown in Figures 4 and 5.

Figure 4. Matrix development of the measurement model of the dependent variable

Figure 5. Matrix development of the measurement model of the independent variables

The development proposed here satisfies all the conditions established by Jöreskog and Sörbom (1978) for the covariance matrix of the observed variables (under the assumptions of LISREL).1 Specifically, our model postulates seven matrices in terms of which this covariance matrix is structured:

1. The matrix of coefficients ($\Lambda_y$), or factor loadings, linking the indicators of the endogenous variables to the latent endogenous variables (in our case a vector);

2. The matrix of coefficients ($\Lambda_x$), or factor loadings, linking the indicators of the exogenous variables to the latent exogenous variables;

3. The matrix ($\Gamma$) of the effects of the latent exogenous variables on the latent endogenous variables (in our case a vector);

4. The variance-covariance matrix ($\Phi$) of the latent exogenous variables (in our case a 4 × 4 diagonal matrix, since the exogenous variables are assumed to be orthogonal);

5. The variance-covariance matrix ($\Psi$) of the residuals $\zeta$ (in our case a scalar);

6. The variance-covariance matrix ($\Theta_\varepsilon$) of the measurement errors $\varepsilon$ of the indicators y;

7. The variance-covariance matrix ($\Theta_\delta$) of the measurement errors $\delta$ of the indicators x.
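With a single endogenous variable there are no endogenous-on-endogenous paths, so the seven matrices above fully determine the model-implied covariance matrix of the 17 observed variables, ordered y first and then x as in LISREL:

$$\Sigma(\theta)=\begin{pmatrix}\Lambda_y(\Gamma\Phi\Gamma'+\Psi)\Lambda_y'+\Theta_\varepsilon & \Lambda_y\Gamma\Phi\Lambda_x'\\ \Lambda_x\Phi\Gamma'\Lambda_y' & \Lambda_x\Phi\Lambda_x'+\Theta_\delta\end{pmatrix}$$

The pure-Python sketch below assembles it. All numeric values, the 3/4/3/2 split of indicators across F1 to F4, and the helper names are hypothetical illustrations, not the study's estimates.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    cols = transpose(b)
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]


def add(a, b):
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def diag(values):
    return [[v if i == j else 0.0 for j in range(len(values))] for i, v in enumerate(values)]


def implied_covariance(lam_y, lam_x, gamma, phi, psi, theta_eps, theta_delta):
    """Model-implied covariance Sigma(theta) of (y, x), built from the seven
    matrices; assumes no endogenous-on-endogenous paths (B = 0)."""
    cov_eta = add(matmul(matmul(gamma, phi), transpose(gamma)), psi)  # Gamma Phi Gamma' + Psi
    s_yy = add(matmul(matmul(lam_y, cov_eta), transpose(lam_y)), theta_eps)
    s_xx = add(matmul(matmul(lam_x, phi), transpose(lam_x)), theta_delta)
    s_yx = matmul(matmul(matmul(lam_y, gamma), phi), transpose(lam_x))  # Lambda_y Gamma Phi Lambda_x'
    top = [r_yy + r_yx for r_yy, r_yx in zip(s_yy, s_yx)]
    bottom = [r_xy + r_xx for r_xy, r_xx in zip(transpose(s_yx), s_xx)]
    return top + bottom


# Hypothetical parameter values (invented for illustration only).
sizes = [3, 4, 3, 2]  # assumed number of indicators per exogenous factor
lam_x = [[0.8 if j == f else 0.0 for j in range(4)] for f, k in enumerate(sizes) for _ in range(k)]
lam_y = [[0.7] for _ in range(5)]
gamma = [[0.40, 0.30, 0.25, 0.20]]
phi = diag([1.0] * 4)  # orthogonal exogenous factors: Phi is diagonal
psi = [[0.50]]
theta_eps = diag([0.51] * 5)
theta_delta = diag([0.36] * 12)

sigma = implied_covariance(lam_y, lam_x, gamma, phi, psi, theta_eps, theta_delta)
p = len(sigma)
print(p, p * (p + 1) // 2)  # 17 153  (observed variables, distinct (co)variances)
print(round(sigma[0][0], 4), round(sigma[5][5], 4), round(sigma[0][5], 4))  # 0.9277 1.0 0.224
```

Comparing this $\Sigma(\theta)$ with the sample covariance matrix is what the estimation step does; the 153 distinct moments bound how many free parameters the model can identify.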

This design was chosen with the aim of the work in mind, namely to verify or confirm the worth of a training program; as stated previously, structural models appear, from an epistemological point of view, to be a suitable method for addressing and testing this (Vega, 1985).

The model was analyzed with the program EQS Version 13 of the BMDP Statistical Software, and the parameters were estimated by Maximum Likelihood (ML), because this estimator minimizes the function defined as:

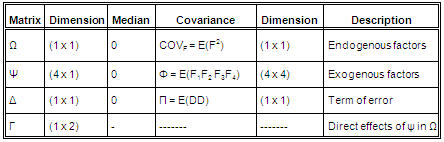

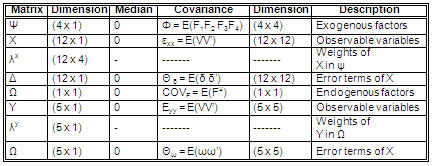
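The sketch below assumes the standard ML discrepancy function of covariance structure analysis, $F_{ML} = \ln|\Sigma(\theta)| + \mathrm{tr}(S\,\Sigma(\theta)^{-1}) - \ln|S| - p$, where S is the sample covariance matrix and p the number of observed variables (17 here). It only evaluates the function for given matrices; it is not EQS's implementation, and the search over θ that an estimator performs is omitted. The helper names and the 2 × 2 example matrices are hypothetical.

```python
import math


def cholesky(a):
    """Lower-triangular L with a = L L'; a must be symmetric positive definite."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return low


def log_det(a):
    low = cholesky(a)
    return 2.0 * sum(math.log(low[i][i]) for i in range(len(low)))


def cho_solve(low, b):
    """Solve (L L') z = b by forward and back substitution."""
    n = len(b)
    w = [0.0] * n
    for i in range(n):
        w[i] = (b[i] - sum(low[i][k] * w[k] for k in range(i))) / low[i][i]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (w[i] - sum(low[k][i] * z[k] for k in range(i + 1, n))) / low[i][i]
    return z


def f_ml(s, sigma):
    """ML discrepancy: ln|Sigma| + tr(S Sigma^-1) - ln|S| - p."""
    p = len(s)
    low = cholesky(sigma)
    # tr(S Sigma^-1) = tr(Sigma^-1 S): diagonal of Sigma^-1 S, one column at a time.
    trace = sum(cho_solve(low, [s[i][j] for i in range(p)])[j] for j in range(p))
    return log_det(sigma) + trace - log_det(s) - p


s_sample = [[2.0, 0.0], [0.0, 2.0]]     # hypothetical sample covariance matrix
sigma_model = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical model-implied matrix
print(round(f_ml(s_sample, sigma_model), 6))  # 0.613706 (= 2 - ln 4)
print(abs(f_ml(s_sample, s_sample)) < 1e-12)  # True: a perfect fit gives F_ML = 0
```

F_ML is zero only when the model reproduces S exactly and positive otherwise; multiplied by the sample size minus one, its minimum is commonly used as the likelihood-ratio chi-square statistic for the model.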

The structural and measurement components of the proposed model are summarized in the following tables:

a) Structural components of the model of covariance structures (see Table I)

Table I. Structural model

b) Components of the measurement model of covariance structures (see Table II)

Table II. Measurement model

V. Conclusions

Although the notion of educational evaluation has undergone many, and at times significant, changes since Tyler (1950, p. 69) defined it in a teleocentric manner, everyone would agree that “the evaluation of training is one of the most relevant and meaningful activities in every planning process and the development of training” (Ruiz, 2007, p. 651). However, there appears to be little culture of evaluation among the trainers of trainers, which means that most teacher-training programs do not ordinarily provide the conceptual and procedural keys that enable the development of evaluation practices. This situation frames the present work, whose findings can be situated along three main axes.

First, the proposal of this work revolves around the need to train a body of teachers in the methods of cooperative learning. Indeed, looking back from the twenty-first century, we can see that over the last three centuries there has been a shift from the purest Enlightenment universalism that characterized Western thought in the eighteenth century to a cultural relativism that, forged at the dawn of the nineteenth century, reached the last quarter of the twentieth century with renewed vigor.

Owing to the globalization of politics, economics and, above all, culture, this situation has led to a rethinking of the dialectical relation between the particular and the universal, a rethinking that pursues a multicultural universalism capable of responding simultaneously to the identical and to the different. This calls into question the rationality of an educational system entrenched in the poverty of a narrow sameness that ignores the enormous wealth of otherness and difference. Therefore, if our leaders desire stability (global, national or regional), they should be aware of the Hegelian principle that “the identities that are not recognized by those who share life and destinations, are inherently unstable”. They must also be aware that a policy aimed at these principles of integration without absorption must start with the basic element of any transformation: education.

In this sense, from the early work of Dewey, through the studies of Lewin, to the substantive foundations provided by the theories of Piaget and Vygotsky, peer interaction has become the most promising element on which to base the organization of classrooms that increasingly present broad cognitive, social, ethnic and cultural heterogeneity.

Building on this process, methodologies have been developed since the 1970s that are based on a positive interdependence among the students' individual goals, the achievement of which depends on the quality of this interaction process. These teaching methods are grouped under the common name of methods of cooperative learning.

Clearly, the application of a cooperative methodology in the classroom means training the teachers who have to develop it. For this reason, it is necessary to train a body of teachers from different levels of education in the skills needed for dealing successfully with this task.

The preparation of training programs for the teaching force, both initial training (Dyson, 2001; Serrano, Pons and Calvo, 2008) and ongoing training (Emmer and Stough, 2001; Hawkes, 2000; Serrano and Pons, 2007; Serrano, Calvo, Pons, Moreno and Lara, 2008; Serrano, Pons, Moreno and Lara, 2008; Solomon, 2000), in methodologies that make peer interaction possible is a promising line of research in the effort to organize classrooms cooperatively (Hoy and Tschannen-Moran, 1999), and it enables the development of integrated teacher-training projects such as SELA2 (Almog and Hertz-Lazarowitz, 1999).

Second, we propose a segmented design for teacher-training programs, because this type of formulation allows a better evaluation of the program's effects and of its capacity to provide:

A greater wealth of the elements that make up the conceptual keys of the program, attempting to answer questions about what needs it is trying to meet, how it can meet them from a highly contextualized perspective, and how it can be formulated so as to guarantee a priori that it can be carried out;

A holistic procedural system, which needs to describe the strategic action that will make possible the achievement of the desired competencies, and that can be reached only through a highly-organized structure among activities, means and products, within a specific time frame;

A prognosis system regarding application vs. non-application, a sine qua non for its evaluability, which necessitates a classification of the products, the results, the impacts, and the collateral effects.

Third, since such evaluations work with categorical variables,3 we propose that the most appropriate structure for them is determined by the application of causal models. Moreover, non-experimental research can approximate experimental research through structural equation models, by introducing into them the variables needed to refute relations between variables that are not derived from the theory.4 Structural equation models are also one of the most powerful tools for studying causal relationships with non-quantitative data (Batista and Coenders, 2000). These models have become very popular over the last three decades, for eight important reasons:

1) One works with constructs that are measured through indicators, after which the quality of the measurement is evaluated;

2) Phenomena are seen in their true complexity (incorporating multiple endogenous and exogenous variables) and therefore, from a more realistic perspective and with a high level of ecological validity;

3) Measurement and prediction (factor analysis and path analysis) are considered together, so that the effects of the latent variables on one another are evaluated without contamination by measurement error;

4) Confirmatory perspective is introduced in the statistical model because the researcher must enter his/her theoretical knowledge into the model’s specifications before making the estimate;

5) The observed covariances (and not only the variances) are decomposed from the perspective of an analysis of interdependence;

6) The quality of the measurement, the reliability and the validity of every indicator are evaluated;

7) The best indicators, including the modality of optimal response, are chosen;

8) The error of measurement and that of prediction are evaluated separately.
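Points 3, 6 and 8 concern separating measurement error from the relations among constructs. A simplified illustration outside SEM is the classical correction for attenuation: if two indicators each carry error, their observed correlation understates the correlation of what they measure. The sketch computes an indicator's reliability from a loading λ, factor variance φ and error variance θ as λ²φ / (λ²φ + θ), then disattenuates a correlation. All numbers and function names are hypothetical.

```python
import math


def indicator_reliability(loading, factor_variance, error_variance):
    """Share of an indicator's variance due to its factor:
    lambda^2 * phi / (lambda^2 * phi + theta)."""
    common = loading ** 2 * factor_variance
    return common / (common + error_variance)


def disattenuate(r_observed, reliability_a, reliability_b):
    """Classical correction for attenuation: r_obs / sqrt(rel_a * rel_b)."""
    return r_observed / math.sqrt(reliability_a * reliability_b)


# Hypothetical values, invented for illustration.
rel_a = indicator_reliability(0.8, 1.0, 0.36)  # indicator of one factor
rel_b = indicator_reliability(0.7, 1.0, 0.51)  # indicator of another factor
r_obs = 0.20                                   # observed correlation between the two indicators

r_latent = disattenuate(r_obs, rel_a, rel_b)
print(round(rel_a, 2), round(rel_b, 2), round(r_latent, 3))  # 0.64 0.49 0.357
```

In a structural equation model this correction is not applied after the fact: loadings, error variances and structural coefficients are estimated jointly, which is what allows measurement error and prediction error to be evaluated separately.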

All this shows that, given the purpose of this work, models of structural equations become one of the best tools the trainer of trainers can use for evaluating his/her action/intervention.

References

Almog, T. & Hertz-Lazarowitz, R. (1999). Teachers as peer learner: Professional development in an advanced computer learning environment. In A. M. O'Donnell & A. King (Eds.), Cognitive perspectives on peer learning. The Rutgers Invitational Symposium on Education Series (pp. 285-311). Mahwah, NJ: Lawrence Erlbaum Associates.

Alvira, F. (1991). Metodología de la evaluación de programas. Madrid: CIS.

Arnau, J. & Guardia, J. (1990). Diseños longitudinales en panel: Alternativa de análisis de datos mediante los sistemas de ecuaciones estructurales. Psicothema, 2 (1), 57-71.

Batista, J. M. & Coenders, G. (2000). Modelos de ecuaciones estructurales. Madrid: La Muralla.

Blalock, H. M. (1964). Causal inferences in non-experimental research. NY: Norton.

Berk, R. A. & Rossi, P. H. (1990). Thinking about program evaluation. Newbury Park, CA: Sage.

Calvo, M. T., Serrano, J. M., González-Herrero, M. E., & Ato, M. (1996). Formación de profesores: Una experiencia de elaboración y aplicación de métodos de aprendizaje cooperativo en las aulas de Educación Infantil, Primaria y Secundaria Obligatoria. Madrid: Ministerio de Educación.

Coenders, G. (2005). Temas avanzados en modelos de ecuaciones estructurales. Madrid: La Muralla.

Cronbach, L. J., Ambron, S. R., Dornbusch, S. M., Hess, R. D., Hornik, R. C., Phillips, D. C., et al. (1980). Towards reform in program evaluation: Aims, methods and institutional arrangements. San Francisco: Jossey-Bass.

Cudeck, R. (1989). Analysis of correlation matrices using covariance structures. Psychological Bulletin, 105, 317-327.

Dettori, G., Giannetti, T., & Persico, D. (2006). SRL in Online Cooperative Learning: Implications for pre-service teacher training. European Journal of Education, 41 (3-4), 397-414.

Díez, J. (1997). Métodos de análisis causal. Madrid: Siglo XXI.

Dyson, B. (2001). Cooperative learning in an elementary physical education program. Journal of Teaching in Physical Education, 20 (3), 264-281.

Emmer, E. T. & Stough, L. M. (2001). Classroom management: A critical part of educational psychology, with implications for teacher education. Educational Psychologist, 36 (2), 103-112.

Goldberger, A. J. & Duncan, O. D. (Eds.) (1973). Structural equation models in the social sciences. NY: Academic Press.

Golightly, A., Nieuwoudt, H. D., & Richter, B. W. (2006). A Concept Model for Optimizing Contact Time in Geography Teacher Training Programs. Journal of Geography, 105 (5), 185-197.

Hagenaars, J.A. (2002). Directed loglinear modelling with latent variables: Causal models for categorical data with non-systematic and systematic measurement errors. In J. A. Hagenaars & A. L. McCutcheon (Eds.), Applied latent class analysis (pp. 234-286). Cambridge: Cambridge University Press.

Hall, N. (2007). Structural equations and causation. Philosophical Studies, 132 (1), 109-136.

Halpern, J. Y. & Pearl, J. (2005). Causes and explanations: A structural-model approach. II. Explanations. The British Journal for the Philosophy of Science, 56 (4), 889-911.

Hawkes, M. (2000). Structuring computer-mediated communication for collaborative teacher development. Journal of Research and Development in Education, 33 (4), 268-277.

Herbert, B. A. (1977). Causal modeling. London: Sage.

Hoy, A. W. & Tschannen-Moran, M. (1999). Implications of cognitive approaches to peer learning for teacher education. In A. M. O'Donnell & A. King (Eds.), Cognitive perspectives on peer learning. The Rutgers Invitational Symposium on Education Series (pp. 257-284). Mahwah, NJ: Lawrence Erlbaum Associates.

Ibarra, O. H., Leininger, B. S., & Rosier, L. E. (1984). A note on the complexity of program evaluation. Mathematical Systems Theory, 17 (2), 85-96.

Ichimura, H. & Linton, O. (2005). Asymptotic expansions for some semiparametric program evaluation estimators. Cambridge: Cambridge University Press.

Johnson, D. W. & Johnson, R. T. (1990). Cooperation and competition: Theory and Research. Hillsdale, NJ: Lawrence Erlbaum Associates.

Jones, B. F. (1992). Cognitive designs in instruction. In M. C. Alkin (Ed.), Encyclopaedia of Educational Research (pp. 166-178). NY: Macmillan.

Jöreskog, K. G. (1969). A general approach to confirmatory maximum likelihood factor analysis. Psychometrika, 34, 183-202.

Jöreskog, K. G. & Sörbom, D. (1978). LISREL 6: A general computer program for estimation of a linear structural equation system by maximum likelihood methods. Chicago: National Educational Resources.

Jöreskog, K. G. & Sörbom, D. (1996). LISREL 8: Structural equation modeling with the SIMPLIS command language. Hove and London: Scientific Software International.

Jöreskog, K. G. & Van Thillo, M. (1972). LISREL: A General Computer Program for Estimating a Linear Structural Equation System Involving Multiple Indicators of Unmeasured Variables (Uppsala Research Report 73-5). Uppsala: Uppsala University.

Kagan, S. (1985). Dimensions of cooperative classroom structures. In R. Slavin, S. Sharan, S. Kagan, R. Hertz Lazarowitz, C. Webb, & R. Schmuck (Eds.), Learning to cooperate, cooperating to learn (pp. 67-96). NY: Plenum Press.

Leikin, R. (2004). The wholes that are greater than the sum of their parts: employing cooperative learning in mathematics teachers’ education. The Journal of Mathematical Behavior, 23 (2), 223-256.

McDonald, R. P. (1980). A simple comprehensive model for the analysis of covariance structures: Some remarks on applications. British Journal of Mathematical and Statistical Psychology, 33, 161-183.

Municio, P. (1992). La evaluación segmentada de programas. Bordón, 43 (4), 375-395.

Popper, K. (1969). Búsqueda sin término. Barcelona: Alianza Editorial.

Posavac, E. J. & Carey, R. G. (1985). Program evaluation: Methods and case studies. Englewood Cliffs, NJ: Prentice-Hall.

Ruiz, C. (2007). Evaluación de la formación. In J. Tejada & V. Giménez (Comps.), Formación de formadores. Vol. 1: Escenario Aula (pp. 647-701). Madrid: Thomson Editores.

Scriven, M. (1967). The Theory behind Practical Evaluation. Evaluation, 2 (4), 393-404.

Serrano, J. M. & Calvo, M. T. (1994). Aprendizaje cooperativo. Técnicas y análisis dimensional. Murcia: Servicio de Publicaciones de la Universidad de Murcia.

Serrano, J. M., Calvo, M. T., Pons, R. M., Moreno, T., & Lara, R. S. (January, 2008). Training teachers in cooperative learning methods. Paper presented at Cooperative Learning in Multicultural Societies: Critical Reflections. Torino, Italy.

Serrano, J. M. & González-Herrero, M. E. (1996). Cooperar para aprender. ¿Cómo implementar el aprendizaje cooperativo en el aula?. Murcia: DM Editores.

Serrano, J. M. & Pons, R. M. (2006). El diseño de la instrucción. In J. M. Serrano (Comp.), Psicología de la Instrucción. Vol.II: El diseño instruccional (pp. 17-71). Murcia: DM Editores.

Serrano, J. M. & Pons, R. M. (2007). Formación de profesores en Métodos de Aprendizaje Cooperativo. In T. Aguado, I. Gil, & P. Mata (Comps.), Formación del profesorado y práctica escolar (pp. 20-32). Madrid: Universidad Nacional de Educación a Distancia.

Serrano, J. M. & Pons, R. M. (2008). Hacia un nuevo replanteamiento de la unidad de análisis del constructivismo. Revista Mexicana de Investigación Educativa, 13 (38), 681-712.

Serrano, J. M., Pons, R. M., & Calvo, M. T. (January, 2008). Reward structure as a tool to generate positive interdependence in higher education. Paper presented at Cooperative Learning in Multicultural Societies: Critical Reflections. Torino, Italy.

Serrano, J. M., Pons, R. M., Moreno, T., & Lara, R. S. (2008). Formación de profesores en MAC (Research Report). Madrid: Agencia Española de Cooperación Internacional.

Serrano, J. M., Pons, R. M., & Ruiz, M. G. (2007). Perspectiva histórica del aprendizaje cooperativo: un largo y tortuoso camino a través de cuatro siglos. Revista Española de Pedagogía, 236, 125-138.

Solomon, R. P. (2000). Exploring cross-race dyad partnerships in learning to teach. Teachers College Record, 102 (6), 953-979.

Spearman, C. (1904). General intelligence, objectively determined and measured. American Journal of Psychology, 15, 201-293.

Stake, R. E. (1998). Advances in program evaluation (Vol. 4). London: JAI Press.

Steiger, J. H. (1989). EZPATH: A supplementary module for SYSTAT and SYGRAPH. Evanston, IL: SYSTAT.

Stufflebeam, D. L. (2001a). Evaluation models. New directions for program evaluation. San Francisco, CA: Jossey-Bass.

Stufflebeam, D. L. (2001b). Evaluation checklists: Practical tools for guiding and judging evaluations. American Journal of Evaluation, 22 (1), 71-79.

Suchman, E. A. (1967). Evaluative research: Principles and practice in public service and social action programs. NY: Russell Sage Foundation.

Suchman, E. A. (1990). The social experiment and the future of evaluative research. American Journal of Evaluation, 11 (3), 251-259.

Talmage, H. (1982). Evaluation of programs. In H. M. Mitzel (Ed.), Encyclopedia of Educational Research (pp. 592-611). NY: Macmillan.

Tyler, R. W. (1950). Basic principles of curriculum and instruction. Chicago: University of Chicago Press.

Vega, J. L. (1985). Metodología longitudinal. In A. Marchesi, M. Carretero & J. Palacios (Comps.), Psicología Evolutiva. Vol 1: Teorías y métodos (pp. 369-396). Madrid: Alianza.

Wang, M. C. & Walberg, H. J. (1987). Evaluating educational programs: An integrative, causal modeling approach. In D. S. Cordray & M. W. Lipsey (Eds.), Evaluation Studies: Review Annual (pp. 534-553). Thousand Oaks, CA: Sage.

Worthen, B. R. (1990). Program evaluation. In H. J. Walberg & G. D. Haertel (Eds.), The International Encyclopedia of Educational Evaluation (pp. 42-47). Oxford: Pergamon Press.

Wright, S. (1934). The method of path coefficients. Annals of Mathematical Statistics, 5, 161-215.

Translator: Lessie Evona York Weatherman

UABC Mexicali

1LISREL is the acronym of LInear Structural RELations, a program developed by Jöreskog and Van Thillo in 1972, which has had the greatest impact on the development of structural equation models. Since the first LISREL program, various versions and updates have appeared (Jöreskog and Sörbom, 1996), along with other programs such as Covariance Structure Analysis (COSAN), developed by R. McDonald in 1980; the Structural Equations Program (EQS), developed by P. M. Bentler in 1984; and EZPATH, developed by J. H. Steiger in 1989 as a supplementary module for SYSTAT and SYGRAPH. Today there are highly advanced versions of these programs, and almost all statistical packages perform structural equation analysis.

2Initials in Hebrew for ‘Learning environments with advanced technologies’.

3The publication of Blalock's book Causal inferences in non-experimental research (1964) opened the way to causal approaches to categorical data.

4The theory also points out the temporal order of variables (the use of longitudinal designs).

Please cite the source as:

Serrano, J. M., Moreno, T., Pons, R. M., & Lara, R. S. (2008). Evaluation of teacher-training programs in cooperative learning methods, based on an analysis of structural equations. Revista Electrónica de Investigación Educativa, 10 (2). Retrieved month day, year, from: http://redie.uabc.mx/vol10no2/contents-serranomoreno.html